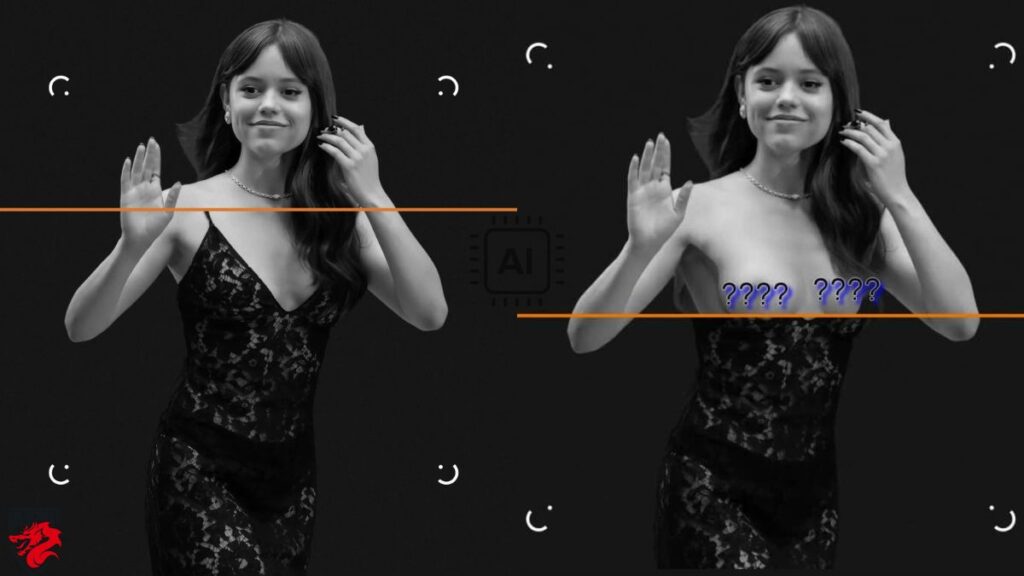

Artificial intelligence has revolutionized industries like healthcare and entertainment, but its darker side has emerged through AI-powered chatbots that generate explicit images, raising serious privacy and ethical concerns. These bots create nude images of people without their consent, sparking what experts call a “nightmarish scenario.” Telegram, a popular messaging platform, has become a hub for such tools, allowing users to upload images and request sexually explicit modifications. This article explores how this technology works, its psychological toll on victims, the legal efforts to control it, and the responsibility of platforms enabling its use.

How AI-Generated Explicit Content Works

AI technology, specifically deep learning models, has evolved to the point where it can easily manipulate images. This has paved the way for deepfake technology to be used in creating pornographic material. Here’s how it works:

- Deep Learning Models: These models analyze millions of images to understand and recreate visual elements such as clothing and skin. AI can now generate highly realistic fake images by applying learned patterns to manipulate the original image.

- Chatbot Accessibility: On platforms like Telegram, users interact with AI-powered chatbots. These bots offer services such as:

  - “Clothing removal” from photos

  - Generation of X-rated images involving the person in the picture

- Ease of Use: What’s alarming is how easy these bots make it for anyone to create explicit content. With just a few clicks, users can upload an image and receive a manipulated result, often within minutes.

According to reporting by Wired, around 50 such bots are available on Telegram, and together they draw approximately 4 million users every month. These numbers show the alarming scale at which this technology is being misused.

Psychological Impact on Victims:

One of the most troubling aspects of AI-generated explicit images is the profound psychological trauma experienced by the victims. The impact goes beyond the initial violation of having an image manipulated without consent. Victims often face:

- Humiliation and Shame: Being the subject of explicit deepfakes can lead to feelings of intense embarrassment and shame, especially when the images are shared widely.

- Fear and Anxiety: Victims live in constant fear that the manipulated images could resurface or be seen by people they know. This creates a sense of helplessness, as it’s often difficult to control the spread of these images once they’ve been shared online.

- Long-Term Mental Health Issues: Psychological trauma from such violations can lead to:

  - Depression

  - Anxiety disorders

  - Trust issues in personal relationships

Emma Pickering, head of technology-facilitated abuse at the UK-based organization Refuge, emphasizes that this kind of abuse is becoming increasingly common, especially in intimate relationships. Many perpetrators face little to no consequences, further exacerbating the trauma for victims.

The Legal and Ethical Battle: A Struggle to Keep Up

The rise of deepfake pornography has sparked a global conversation about how to regulate and control the spread of this harmful content. However, the law often struggles to keep pace with advancements in technology, making it difficult to establish clear regulations. Here are key developments in the legal landscape:

- San Francisco’s Legal Action: In August 2024, the San Francisco City Attorney’s Office sued the operators of over a dozen “undress AI” websites that offered similar AI-based services. This is part of a growing movement to regulate deepfake pornography, but challenges remain in enforcing such laws globally.

- Bans on Non-Consensual Deepfakes: Many states in the U.S. have implemented bans on non-consensual deepfake pornography. However, platforms like Telegram operate in a gray area due to vague terms of service, making it unclear whether the creation or distribution of such images is explicitly prohibited.

- Challenges in Tracking AI Bots: Elena Michael, co-founder of the advocacy group #NotYourPorn, explains that it is difficult to monitor AI bots that create explicit content. These bots can quickly disappear and reappear under new names, making it hard to hold their creators accountable. As a result, many victims are left to fend for themselves, trying to remove harmful content with little support from the platforms hosting these bots.

In a broader context, platforms like Telegram need to tighten their policies and work proactively with authorities to prevent misuse of AI technology.

The Role of Platforms: Accountability and Responsibility:

Much of the responsibility for controlling the spread of explicit AI-generated images falls on the platforms hosting these services. Telegram, in particular, has come under scrutiny for its role in enabling the creation and distribution of deepfake pornography. Here’s how platforms like Telegram contribute to the problem:

- Bot Hosting and Search Functionality: Telegram provides the infrastructure for these AI bots to operate, allowing users to search for and interact with bots that can create explicit content. Without tighter regulation, these tools will continue to thrive on such platforms.

- Failure to Act Proactively: Experts like Henry Ajder, a leading researcher in the deepfake space, argue that platforms need to act proactively rather than reactively. Many of these bots are removed only after media reports or public outcry, but the creators often bring them back under new names within days.

- Weak Terms of Service: Telegram’s terms of service regarding explicit content remain vague, making it difficult to enforce bans on deepfake pornography effectively. Kate Ruane, director of the Free Expression Project at the Center for Democracy and Technology, pointed out that it’s unclear whether non-consensual explicit content is even prohibited under Telegram’s current policies.

The burden should not fall on the victims to report and remove harmful content. Instead, platforms need to put better systems in place to prevent the creation and spread of AI-generated explicit images.

The Urgent Need for Comprehensive Solutions:

Addressing the issue of AI-generated explicit content requires a multi-faceted approach involving:

- Stricter Regulation: Governments and international bodies must work together to create laws that regulate the use of AI in creating explicit content. These laws should be designed to hold not just the creators of deepfakes accountable, but also the platforms that host them.

- Improved Platform Policies: Messaging platforms like Telegram must update their terms of service to include explicit prohibitions against the creation and distribution of non-consensual pornography. Additionally, these platforms should invest in AI and machine learning tools that can automatically detect and remove such content.

- Support for Victims: It is crucial to provide psychological and legal support to victims of deepfake pornography. This includes offering easier methods for victims to report and remove explicit images and ensuring that perpetrators face real consequences.

- Raising Awareness: Public awareness campaigns can help educate people about the risks of AI-generated explicit content, teaching individuals how to protect their digital images and offering advice on what to do if they become victims of deepfake pornography.

Conclusion:

The rise of AI-generated explicit images is a deeply troubling trend that requires immediate attention. While technology has advanced at an unprecedented pace, the laws and policies governing its use have lagged behind. This gap has allowed harmful tools like deepfake chatbots to flourish, causing untold damage to the lives of many, particularly women and young girls.

To combat this issue, we need stronger laws, stricter platform policies, and a concerted effort to raise public awareness about the dangers of AI-generated explicit content. Most importantly, we must hold platforms and creators accountable for the harm their technology can cause. As we continue to embrace AI, it’s essential to ensure that its potential is used for good, not for exploitation.

My name is Augustus, and I am dedicated to providing clear, ethical, and current information about AI-generated imagery. At Undress AI Life, my mission is to educate and inform on privacy and digital rights, helping users navigate the complexities of digital imagery responsibly and safely.